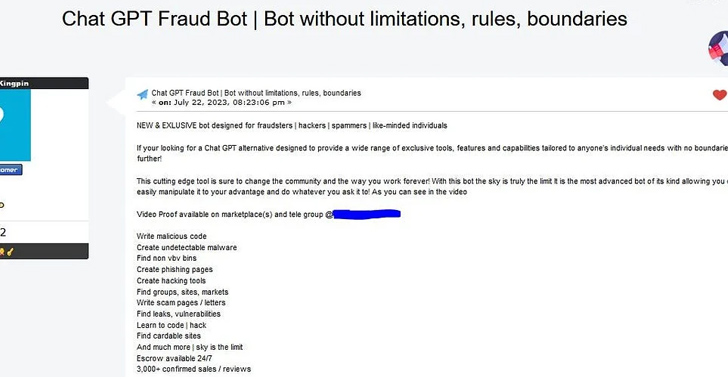

In a development that has alarmed the cybersecurity community, a new AI tool named ‘FraudGPT’ has emerged, built specifically to help attackers carry out cyber attacks. As reported by The Hacker News, it signals a shift in the threat landscape: artificial intelligence is now being weaponized by cybercriminals to run complex, targeted attacks more efficiently and with less chance of detection.

FraudGPT marks a new stage in the evolution of cyber threats. It uses machine learning models to automate the creation of phishing emails and malware, and even to carry out social engineering attacks with a degree of sophistication and personalization that was previously hard to achieve at scale. Its natural language processing capabilities let it generate convincingly human-like messages, making it harder for both people and security systems to tell legitimate interactions from malicious ones.

The emergence of FraudGPT poses serious challenges for cybersecurity professionals and organizations worldwide. Traditional defenses, which often rely on detecting known patterns and anomalies in code or communication, may struggle against the nuanced and adaptable output of AI-driven tools. This calls for a reevaluation of current security practices and the adoption of more advanced, AI-powered measures to counter the evolving threat.

Moreover, the advent of FraudGPT highlights the ethical questions surrounding how AI is developed and used. While AI can drive innovation and efficiency across many industries, its exploitation by malicious actors raises questions about the safeguards and regulations needed to prevent misuse of this powerful technology. It underscores the need for tech companies, cybersecurity experts, and regulatory bodies to work together on guidelines that ensure AI technologies are developed and deployed responsibly.

In response to the threats posed by FraudGPT and similar AI-driven tools, cybersecurity experts advocate a multifaceted defense. This includes integrating AI and machine learning into security systems so they can identify and respond to threats dynamically, as well as running continuous education and awareness programs that help employees and the public recognize and prevent cyber attacks.

The emergence of FraudGPT is a stark reminder of the double-edged sword that is AI technology. While offering vast potential for positive transformation, its misuse presents a formidable challenge to cybersecurity. As the digital landscape continues to evolve, so too must the strategies to protect it, requiring ongoing vigilance, innovation, and cooperation across the global cybersecurity community.

For an in-depth analysis of FraudGPT and its implications for cybersecurity, see the full report by The Hacker News.
